KumoScale™ Software

- IMPORTANT NOTICE -

Thank you for your interest in KumoScale™ software ("Product"). There is no plan for enhancement beyond Version 3.22, as the Product has transitioned to maintenance only, and no new evaluation or production licenses will be granted. If you have any questions, please contact us here.

Disaggregated NVMe-oF™ Storage Management for Data Centers

KumoScale™ software implements NVMe™ over Fabrics (NVMe-oF™) to provide a fast, networked block storage service between initiator and target over a high-speed network connection. The software runs on storage nodes populated with NVMe™ SSDs and realizes storage disaggregation. KumoScale software supports both RDMA and NVMe over TCP for network transport and provides a shared, clustered storage pool that can be logically divided by NVMe namespace across a set of SSDs. Compared to Direct Attached Storage (DAS), KumoScale volume management gives much more flexibility in controlling a big storage pool that consists of large individual SSDs.

- 27-07-2022

Use Case

Typical applications and use cases

- High performance storage service for cloud-native application

- Persistent storage service for OpenStack™ and Kubernetes®

- High speed block storage service for HPC applications and other scientific applications

- Backend storage for AI/ML/DL applications

KumoScale™ software is designed to work with generally available NVMe-oF™ initiator software as well as standards-based NVMe SSDs. No proprietary software drivers or hardware are necessary.

Function

Improved storage utilization and more flexibility in managing pooled, fast NVMe™ SSDs

The capacity and performance of the latest PCIe® Gen 4 NVMe SSDs have grown well beyond those of PCIe Gen 3 and are far more than a single compute node can consume. Sharing SSDs across different user applications and servers/VMs is an urgent need. Until NVMe-oF™ was introduced and became widespread, networked storage was slow, expensive, or both. That is no longer true with NVMe-oF™. KumoScale™ software increases storage utilization by sharing SSDs and dividing the shared SSDs by NVMe namespace, and it adds flexibility to provision the right amount of capacity from the pool through software at the performance of NVMe™ SSDs.
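The idea of carving right-sized volumes out of a shared pool can be sketched with a toy capacity model. This is only an illustration under stated assumptions: the `StoragePool` class, the volume names, and the SSD sizes are hypothetical, and the model tracks capacity only. It does not represent how KumoScale maps namespaces onto individual SSDs.

```python
from dataclasses import dataclass, field


@dataclass
class StoragePool:
    """Toy model of a shared pool built from several SSDs (capacities in GB)."""
    ssd_capacities_gb: list[int]
    volumes: dict[str, int] = field(default_factory=dict)  # name -> size in GB

    @property
    def total_gb(self) -> int:
        return sum(self.ssd_capacities_gb)

    @property
    def allocated_gb(self) -> int:
        return sum(self.volumes.values())

    def provision(self, name: str, size_gb: int) -> None:
        """Carve a volume out of the pool, refusing to over-allocate."""
        if size_gb <= 0:
            raise ValueError("size must be positive")
        if name in self.volumes:
            raise ValueError(f"volume {name!r} already exists")
        if self.allocated_gb + size_gb > self.total_gb:
            raise ValueError("not enough free capacity in the pool")
        self.volumes[name] = size_gb

    def utilization(self) -> float:
        """Fraction of the pooled capacity that has been handed out."""
        return self.allocated_gb / self.total_gb


# Hypothetical pool of four 4000 GB SSDs shared by two compute nodes.
pool = StoragePool(ssd_capacities_gb=[4000, 4000, 4000, 4000])
pool.provision("db-node-1", 1500)
pool.provision("analytics-1", 2500)
print(f"{pool.utilization():.0%} of {pool.total_gb} GB allocated")
```

Each consumer receives only the capacity it asks for, and the remainder stays available to other nodes instead of being stranded inside a single server.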

Supports RDMA and NVMe over TCP protocols

KumoScale software supports the RDMA and TCP transport protocols. If you need a faster, lower-latency storage service, RoCEv2 (RDMA over Converged Ethernet) should fit. If, on the other hand, you prefer compatibility with an existing data center network, TCP/IP can be used. For network cards validated with the latest KumoScale software, please refer to the HCL (Hardware Compatibility List).
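On a Linux initiator, the transport is typically chosen when connecting to the target. The sketch below builds an `nvme connect` command line for the generic `nvme-cli` tool and only prints it. It is not a KumoScale-specific tool, and the address and subsystem NQN are placeholder values assumed for illustration.

```python
import shlex

DEFAULT_NVMEOF_PORT = 4420  # IANA-assigned port commonly used for NVMe-oF I/O


def build_connect_command(transport: str, address: str, subsystem_nqn: str,
                          port: int = DEFAULT_NVMEOF_PORT) -> list[str]:
    """Return the argv for an nvme-cli connect call over RDMA or TCP."""
    if transport not in ("rdma", "tcp"):
        raise ValueError("transport must be 'rdma' or 'tcp'")
    return [
        "nvme", "connect",
        "--transport", transport,
        "--traddr", address,
        "--trsvcid", str(port),
        "--nqn", subsystem_nqn,
    ]


# Placeholder target address and NQN, for illustration only.
for transport in ("rdma", "tcp"):
    cmd = build_connect_command(transport, "192.0.2.10",
                                "nqn.2014-08.org.example:volume-1")
    print(shlex.join(cmd))
```

Only the `--transport` value changes between the two cases, which is why the same volume can be offered over RoCEv2 where the network supports it and over TCP elsewhere.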

Adapting to customer provisioning and telemetry architecture

- For OpenStack®, KumoScale™ software provides an OpenStack Cinder® driver supporting the Wallaby release and beyond.

- For Kubernetes®, containerized applications are connected to KumoScale™ storage nodes by the KumoScale Container Storage Interface (CSI) driver.

- For bare-metal environments, Ansible™ playbooks or other popular automation tools may be used for deployment automation and provisioning of storage to compute nodes.

- For telemetry integration, KumoScale™ software provides an interface that enables push/pull data exchange with existing telemetry (time series metrics) and logging (asynchronous events) infrastructure.
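For the pull side of telemetry integration, time series collectors such as Prometheus scrape metrics in a simple text exposition format. The sketch below renders a few samples in that format. The metric names, label names, and values are hypothetical and are not taken from the KumoScale metric set, and the helper assumes label values contain no quotes or backslashes.

```python
def render_metrics(samples: dict[str, float], labels: dict[str, str]) -> str:
    """Render samples as Prometheus-style text lines: name{labels} value."""
    label_text = ",".join(f'{key}="{value}"' for key, value in sorted(labels.items()))
    lines = [
        f"{name}{{{label_text}}} {value}"
        for name, value in sorted(samples.items())
    ]
    return "\n".join(lines) + "\n"


# Hypothetical per-volume counters as a scraper would read them.
text = render_metrics(
    {"volume_read_iops": 1200.0, "volume_write_iops": 300.0},
    {"node": "ks-node-1", "volume": "vol-a"},
)
print(text, end="")
```

In a push model, the same samples would instead be sent by the storage side to the collector on a schedule.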

Data Protection and High Availability

Data Protection: Cross Domain Data Replication (CDDR)

KumoScale™ software provides data protection via a technique called Cross Domain Data Replication (CDDR). CDDR creates multiple replicas of a logical volume on top of pooled SSDs and maps them to storage nodes located in different failure domains. Replication is initiator-based, and replicas can be placed on a second or third KumoScale™ storage node. For replication, a KumoScale agent runs on the initiator to check volume availability. Once the agent detects a volume failure, whatever the reason, the volume is automatically reconnected to the replicated volume on a different storage node. After the resilient volume comes back from the failure, all data is automatically rebuilt onto the fresh resilient volume.
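The replicate, fail over, and rebuild sequence described above can be illustrated with a small in-memory model. This is a conceptual sketch only, not the CDDR implementation: the `Replica` and `ReplicatedVolume` classes, the node names, and the whole-copy rebuild are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    node: str
    failure_domain: str
    healthy: bool = True
    blocks: dict[int, bytes] = field(default_factory=dict)  # LBA -> data


class ReplicatedVolume:
    """Initiator-side view of a volume mirrored across storage nodes."""

    def __init__(self, replicas: list[Replica]) -> None:
        domains = [r.failure_domain for r in replicas]
        if len(set(domains)) != len(domains):
            raise ValueError("each replica must sit in a different failure domain")
        self.replicas = replicas

    def _find(self, node: str) -> Replica:
        return next(r for r in self.replicas if r.node == node)

    def _healthy(self) -> list[Replica]:
        healthy = [r for r in self.replicas if r.healthy]
        if not healthy:
            raise RuntimeError("no healthy replica available")
        return healthy

    def write(self, lba: int, data: bytes) -> None:
        # Mirror every write to all replicas that are currently reachable.
        for replica in self._healthy():
            replica.blocks[lba] = data

    def read(self, lba: int) -> bytes:
        # Reads are served by the first healthy replica (automatic failover).
        return self._healthy()[0].blocks[lba]

    def fail(self, node: str) -> None:
        self._find(node).healthy = False

    def recover(self, node: str) -> None:
        # Rebuild the returning replica from a healthy copy, then re-enable it.
        source = self._healthy()[0]
        target = self._find(node)
        target.blocks = dict(source.blocks)
        target.healthy = True


vol = ReplicatedVolume([Replica("node-a", "rack-1"), Replica("node-b", "rack-2")])
vol.write(0, b"hello")
vol.fail("node-a")        # node-a becomes unreachable
vol.write(1, b"world")    # I/O continues against node-b
print(vol.read(0), vol.read(1))
vol.recover("node-a")     # node-a is rebuilt with the data it missed
```

The application keeps reading and writing through the same volume object for the entire sequence, which is the property the initiator-side agent is meant to preserve.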

Ensure High Availability

KumoScale™ storage nodes support L3 BGP (Border Gateway Protocol), so that when there is a network connection issue, traffic is automatically rerouted to an available network path based on a predefined optimal routing table.

V3.22 Highlights

Volume Migration:

Enhanced volume migration allows an administrator to migrate a volume across storage nodes without disruption for maintenance and utilization. Volumes remain online and continue to serve I/O during the process of moving the data to its new location. The operation is completely transparent to the applications using the volume, enhancing storage cluster resilience.

Cluster Manager CLI v2:

An enhanced Cluster Manager CLI extends the 3.21 CLI v1 to add operator-driven lifecycle automation capabilities available in the KumoScale™ control plane. The 3.22 Cluster Manager CLI now gives administrators full access to both cluster services management and storage provisioning functions via a single, intuitive CLI.

Flexible Volume Class:

A new flexible volume class allows data center infrastructure providers to start their tenants with a non-replicated volume and later upsell them to a resilient replicated volume. It allows data center infrastructure providers to deploy their tenants quickly and add appropriate resiliency in a later phase.

KumoScale™ software version 3.22 also includes improved online technical documentation, Ansible® refactoring for easier storage administrator script adaptation, and a modifiable cluster VIP address.

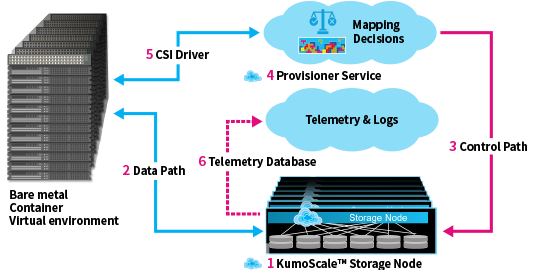

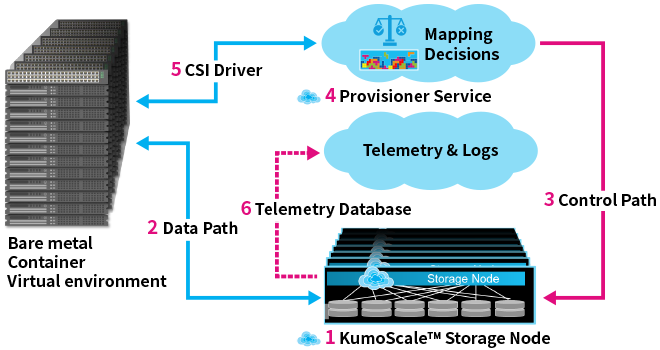

Architecture

Data Center System Architecture

Functions (Modules)

1. KumoScale™ Storage Node

Storage Server that runs KumoScale™ Software

2. Data Path

Network data path connecting server applications to storage via NVMe-oF.

3. Control Path

Network control path to manage internal data services provided by KumoScale.

4. Provisioner Service

Carves out the appropriate storage capacity and provisions the preferred QoS from storage nodes according to predefined parameters.

5. CSI Driver

Container Storage Interface driver for KumoScale. Tightly coupled with CSI driver.

6. Telemetry Database

Database that stores a variety of statistical information from servers and KumoScale storage nodes.

Platform Requirements

KumoScale™ software has been tested on bare metal, OpenStack, and Kubernetes® deployments on a wide range of industry standard servers. Any NVMe-oF™ compliant initiator can be used, including Linux® kernel version 4.8 or later. The supported platform is shown below.

| Components | Minimal Requirement |
|---|---|
| Memory | 64GB DDR4 |
| System Disk | 2 x 128 GB SATA DOM |
| NIC | |
| Power Supply | Dual power supply, hot swappable |
| Management Interface | A dedicated management port is optional. KumoScale™ can use the data port for management traffic, or it can utilize a dedicated management port. |
| KumoScale™ Provisioner | KumoScale™ Provisioner does not require any additional servers nor a container. |
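On the initiator side, the stated Linux kernel minimum of 4.8 can be checked with a few lines of Python. This is a minimal sketch: the helper names are hypothetical, and it compares only the major and minor version numbers reported by the running kernel.

```python
import platform
import re

MIN_KERNEL = (4, 8)  # minimum Linux kernel version stated for initiators


def parse_kernel_release(release: str) -> tuple[int, int]:
    """Extract (major, minor) from a release string such as '5.15.0-91-generic'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def meets_minimum(release: str, minimum: tuple[int, int] = MIN_KERNEL) -> bool:
    """Return True when the kernel release is at or above the minimum."""
    return parse_kernel_release(release) >= minimum


if platform.system() == "Linux":
    release = platform.release()
    print(release, "OK" if meets_minimum(release) else "too old for NVMe-oF initiator use")
```

A passing check only confirms the kernel version. NIC and server validation still depend on the Hardware Compatibility List.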

V3.22 User Manual

The KumoScale V3.22 User Manual includes:

- Hardware Compatibility List (HCL)

- Which KumoScale Deployment Mode Should I Use?

- Release Notes

- Installation Guide for Appliance Mode

- Installation Guide for Managed Mode with Kubernetes

- NVMe™ Host Patch

- User Guide

- Cluster Manager CLI

- Kubernetes™ CSI Driver Guide

- OpenStack™ User Guide

- Provisioner REST API Guide

- KumoScale Metric Collection

- Grafana™ Dashboard Guide

- Ansible™ User Guide

Documents

Inquiries

Please contact us through the inquiry form.

- Before submitting an inquiry, please carefully read the important considerations and accept them, then select "KumoScale" in the "Products" drop-down menu of the inquiry form.

Press Release

- 27-07-2022

- 13-04-2022

- 22-06-2021

- 15 µs latency addition at 4KB read compared to DAS. Measured by KIOXIA in June 2020 using a measurement tool specified by KIOXIA. The 15 µs figure is the difference in 4KB read latency between the DAS and NVMe-oF™ storage configurations on the same hardware.

- NVMe and NVMe-oF are registered or unregistered marks of NVM Express, Inc. in the United States and other countries.

- PCIe is a registered trademark of PCI-SIG.

- Kubernetes is a registered trademark of The Linux Foundation in the United States and/or other countries.

- Ansible is a registered trademark of Red Hat, Inc. in the United States and other countries.

- Linux is a registered trademark of Linus Torvalds in the U.S. and other countries.

- The OpenStack Word Mark is a registered trademark of the OpenStack Foundation, in the United States and other countries, and is used with the OpenStack Foundation's permission. We are not affiliated with, endorsed or sponsored by the OpenStack Foundation, or the OpenStack community.

- Prometheus is a registered trademark of The Linux Foundation.

- Grafana and Loki are trademarks or registered trademarks of Grafana Labs, Inc.

- Intel is a trademark of Intel Corporation or its subsidiaries.

- All other company names, product names, and service names mentioned herein may be trademarks of their respective companies.